Hung-yi Lee Machine Learning Notes 3: Classification, Logistic Regression

Classification

Classification algorithms are everywhere in daily life:

- Credit Scoring

Input: income, savings, profession, age, past financial history ……

Output: accept or refuse

- Medical Diagnosis

Input: current symptoms, age, gender, past medical history ……

Output: which kind of disease

- Handwritten character recognition

- Face recognition

Input: image of a face

Output: person

Example Application

In this lecture, Professor Hung-yi Lee predicts which type a Pokémon belongs to from a series of values such as its HP, Attack, SP Atk, SP Def, and Speed.

How to do Classification?

- Training data for Classification

Classification as Regression?

Binary classification as example

Training: Class 1 means the target is 1; Class 2 means the target is -1

Testing: closer to 1 → class 1; closer to -1 → class 2
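
The training and testing rules above can be sketched in a few lines of NumPy. This is a minimal illustration, not the lecture's code: the toy 1-D data and the helper names `fit_linear_regression` and `predict_class` are assumptions made up for this example. It also shows one way the approach can go wrong: a class 1 point far from the boundary pulls the fitted line toward itself and flips a nearby point.

```python
import numpy as np

def fit_linear_regression(X, y):
    """Least-squares fit of y ~ X @ w + b; returns (w, b)."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    theta, *_ = np.linalg.lstsq(Xb, y, rcond=None)
    return theta[:-1], theta[-1]

def predict_class(X, w, b):
    """Class 1 if the output is closer to 1, class 2 if it is closer to -1."""
    return np.where(X @ w + b >= 0, 1, 2)

# Toy data (hypothetical): class 2 has target -1, class 1 has target 1
X = np.array([[-2.0], [-1.0], [1.0], [2.0]])
y = np.array([-1.0, -1.0, 1.0, 1.0])
w, b = fit_linear_regression(X, y)
labels = predict_class(X, w, b)

# Same data plus one class 1 point far from the boundary
X_out = np.vstack([X, [[30.0]]])
y_out = np.append(y, 1.0)
w_out, b_out = fit_linear_regression(X_out, y_out)
labels_out = predict_class(X_out, w_out, b_out)
```

On the clean data every point is classified correctly. With the far-away point added, the point at x = 1 falls on the class 2 side, because regression is penalized for outputs that are "too correct" (much larger than 1).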

Ideal Alternatives

- Function (Model):

- Loss function:

- Find the best function:

Example: Perceptron, SVM
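
The formulas for this list did not survive in these notes, so the following is only a sketch of one common reading: the model outputs class 1 when a score g(x) is positive, and the loss counts misclassified training examples. The linear form of g, the toy data, and the names `classify` and `zero_one_loss` are assumptions for illustration.

```python
import numpy as np

def classify(X, w, b):
    """f(x): class 1 if g(x) = w . x + b > 0, otherwise class 2."""
    return np.where(X @ w + b > 0, 1, 2)

def zero_one_loss(X, labels, w, b):
    """Number of training examples that f misclassifies."""
    return int(np.sum(classify(X, w, b) != labels))

# Toy data (hypothetical)
X = np.array([[-2.0], [-1.0], [1.0], [2.0]])
labels = np.array([2, 2, 1, 1])

loss_good = zero_one_loss(X, labels, np.array([1.0]), 0.0)
loss_bad = zero_one_loss(X, labels, np.array([-1.0]), 0.0)
```

A count like this is piecewise constant in w and b, so gradient descent cannot be applied to it directly, which is why dedicated methods such as the Perceptron or SVM are needed.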

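Here we use linear regression for classification: data close to -1 is assigned to class 2 and data close to 1 to class 1. We can see that when some data points have values much greater than 1, the green line gradually moves toward the purple line, so in some cases classifying with linear regression can be inaccurate.

Naive Bayes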

Here the mean μ and the covariance matrix Σ are the two factors that determine the shape of the Gaussian; different parameters give different shapes.
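
To make the role of the two parameters concrete, here is a small sketch of the standard multivariate Gaussian density. The function name `gaussian_pdf` and the example parameters are assumptions for illustration, not values from the lecture.

```python
import numpy as np

def gaussian_pdf(x, mu, sigma):
    """Density of a multivariate Gaussian N(mu, sigma) at point x."""
    d = mu.shape[0]
    diff = x - mu
    norm = 1.0 / np.sqrt((2 * np.pi) ** d * np.linalg.det(sigma))
    # solve(sigma, diff) computes sigma^{-1} @ diff without forming the inverse
    return norm * np.exp(-0.5 * diff @ np.linalg.solve(sigma, diff))

mu = np.array([0.0, 0.0])
narrow = gaussian_pdf(mu, mu, np.eye(2))      # density at the mean, unit covariance
wide = gaussian_pdf(mu, mu, 4.0 * np.eye(2))  # same mean, larger covariance
```

With the same mean, the larger covariance spreads the probability mass out, so the peak at the mean is lower.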

Probability from Class

So how do we find this Gaussian function?
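
One standard answer is maximum likelihood: choose the μ and Σ under which the training points of a class are most probable. For a Gaussian this has a closed form, the sample mean and the sample covariance divided by N. The sketch below assumes that approach; `fit_gaussian` and the toy points are made up for illustration.

```python
import numpy as np

def fit_gaussian(X):
    """Maximum-likelihood mean and covariance of the rows of X."""
    mu = X.mean(axis=0)
    diff = X - mu
    sigma = diff.T @ diff / X.shape[0]  # divide by N (MLE), not N - 1
    return mu, sigma

# Toy points for one class (hypothetical)
X = np.array([[1.0, 2.0], [3.0, 2.0], [1.0, 4.0], [3.0, 4.0]])
mu, sigma = fit_gaussian(X)
```

For these four points the estimate is a mean of (2, 3) and an identity covariance matrix.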

Summary

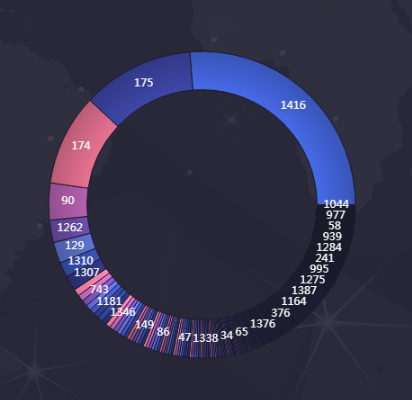

Probability Distribution

Logistic Regression

The steps of Logistic Regression
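
As a rough sketch of the usual three steps (a sigmoid model, a cross-entropy loss, and gradient descent), assuming labels coded as 0/1 rather than the ±1 used earlier. The learning rate, epoch count, toy data, and function names are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logistic_regression(X, y, lr=0.1, epochs=1000):
    """Gradient descent on the mean cross-entropy loss; y holds 0/1 labels."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)           # step 1: model output f(x)
        # step 2: the loss is cross entropy; its gradient w.r.t. w and b is below
        grad_w = X.T @ (p - y) / len(y)
        grad_b = np.mean(p - y)
        w -= lr * grad_w                 # step 3: gradient descent update
        b -= lr * grad_b
    return w, b

# Toy 1-D data (hypothetical)
X = np.array([[-2.0], [-1.0], [1.0], [2.0]])
y = np.array([0.0, 0.0, 1.0, 1.0])
w, b = train_logistic_regression(X, y)
pred = (sigmoid(X @ w + b) >= 0.5).astype(int)
```

The update has the same form as in linear regression, (output minus target) times input; the difference is that the output passes through a sigmoid and therefore stays between 0 and 1.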

Logistic Regression VS Linear Regression

Why not use Logistic Regression + Square Error?

Cross Entropy v.s. Square Error
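
A quick numerical check can make the comparison concrete. The sketch below evaluates the gradient of each loss with respect to the pre-sigmoid score z for a single example whose prediction is badly wrong. The function names and the chosen value of z are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def grad_cross_entropy(z, y):
    """d/dz of -[y ln f + (1 - y) ln(1 - f)] with f = sigmoid(z)."""
    return sigmoid(z) - y

def grad_square_error(z, y):
    """d/dz of (f - y)^2 with f = sigmoid(z)."""
    f = sigmoid(z)
    return 2.0 * (f - y) * f * (1.0 - f)

z_far = -10.0  # output is near 0 while the target is 1
g_ce = grad_cross_entropy(z_far, 1.0)
g_se = grad_square_error(z_far, 1.0)
```

Here the cross-entropy gradient has magnitude close to 1, while the square-error gradient is nearly 0 because of the extra f(1 - f) factor. With square error, a model that starts far from the target barely moves, which is the usual argument for preferring cross entropy.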

Discriminative (Logistic) v.s. Generative (Gaussian)

Multi-class Classification
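
Multi-class classification is commonly handled with softmax, which turns one score per class into probabilities, trained with cross entropy against the true class. The sketch below assumes that setup; the scores are illustrative values and the function names are hypothetical.

```python
import numpy as np

def softmax(z):
    """Turn a vector of scores into probabilities that sum to 1."""
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()

def cross_entropy(p, target_index):
    """Loss for one example whose true class is target_index."""
    return -np.log(p[target_index])

scores = np.array([3.0, 1.0, -3.0])  # one score per class
probs = softmax(scores)
loss = cross_entropy(probs, 0)
```

The exponential exaggerates the gaps between scores, so the largest score ends up with roughly 0.88 of the probability here.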

Limitation of Logistic Regression
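
The best-known limitation is that the decision boundary is a straight line (a hyperplane), so XOR-style data cannot be separated. One workaround is a feature transformation. The sketch below assumes a transformation to "distance to (0, 0)" and "distance to (1, 1)"; the hand-picked weights are illustrative, not learned.

```python
import numpy as np

X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([0, 1, 1, 0])  # XOR-style labels: no single line separates them

def transform(X):
    """New features: distance to (0, 0) and distance to (1, 1)."""
    d0 = np.linalg.norm(X - np.array([0.0, 0.0]), axis=1)
    d1 = np.linalg.norm(X - np.array([1.0, 1.0]), axis=1)
    return np.stack([d0, d1], axis=1)

Z = transform(X)
# Hand-picked linear boundary in the transformed space (illustrative values)
w, b = np.array([1.0, 1.0]), -1.7
pred = (Z @ w + b > 0).astype(int)
```

In the new space both class 1 points map to (1, 1) and the two class 0 points map to (0, √2) and (√2, 0), so a single line separates them. Finding such a transformation by hand is not always practical, which motivates learning it with stacked logistic units.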

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. When reposting, please credit 我的技术小站!
