Gradient Descent in Practice

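In the previous post we covered the theory behind gradient descent. In this post we put it into practice in Python, using Homework 1 from Hung-yi Lee's introductory machine learning course as the example.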

Homework 1 description and dataset: https://ntumlta.github.io/2017fall-ml-hw1

Loading the Data

    import pandas as pd

As you can see, the training set is made up of data like this. Our goal is to estimate the PM2.5 value from AMB_TEMP, CH4, and the rest of the measurements.

Data Cleaning and Preprocessing

    del data['datetime']  # drop the unused datetime column
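As a rough illustration of this kind of cleaning step, here is a minimal sketch on a tiny made-up DataFrame rather than the real training set. The cell values, the invalid `'bad'` entry, and the numeric-coercion and row-dropping steps are assumptions for illustration. The name `data9` simply mirrors the variable used later in this post; how it was actually produced is not shown here.

```python
import pandas as pd

# Hypothetical toy data; the real training set has many more columns and rows.
data = pd.DataFrame({
    'datetime': ['2017-01-01 00:00', '2017-01-01 01:00', '2017-01-01 02:00'],
    'AMB_TEMP': ['14', '13.5', 'bad'],  # readings stored as text, one invalid
    'PM2.5': ['26', '39', '36'],
})

del data['datetime']  # drop the unused datetime column

# Convert every remaining column to numbers; unparseable cells become NaN.
data = data.apply(pd.to_numeric, errors='coerce')

# Drop rows that still contain missing values (an assumed cleaning policy).
data9 = data.dropna().reset_index(drop=True)
print(data9)
```

Coercing with `errors='coerce'` and then dropping incomplete rows is only one possible policy; filling missing values would be another.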

After some processing, the data ended up in this form:

Descriptive Analysis

    plt.figure(figsize=(10, 6))

    # Scatter plots of NO, NO2, NOx and O3 against PM2.5
    plt.figure(figsize=(10, 6))
    plt.subplot(2, 2, 1)
    plt.title('NO')
    plt.scatter(data9['NO'], data9['PM2.5'])
    plt.subplot(2, 2, 2)
    plt.title('NO2')
    plt.scatter(data9['NO2'], data9['PM2.5'])
    plt.subplot(2, 2, 3)
    plt.title('NOx')
    plt.scatter(data9['NOx'], data9['PM2.5'])
    plt.subplot(2, 2, 4)
    plt.title('O3')
    plt.scatter(data9['O3'], data9['PM2.5'])

    # Scatter plots of the wind direction and wind speed readings against PM2.5
    plt.figure(figsize=(10, 6))
    plt.subplot(2, 2, 1)
    plt.title('WD_HR')
    plt.scatter(data9['WD_HR'], data9['PM2.5'])
    plt.subplot(2, 2, 2)
    plt.title('WIND_DIREC')
    plt.scatter(data9['WIND_DIREC'], data9['PM2.5'])
    plt.subplot(2, 2, 3)
    plt.title('WIND_SPEED')
    plt.scatter(data9['WIND_SPEED'], data9['PM2.5'])
    plt.subplot(2, 2, 4)
    plt.title('WS_HR')
    plt.scatter(data9['WS_HR'], data9['PM2.5'])

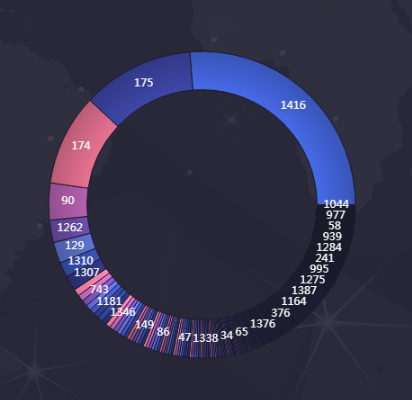

- Correlation analysis

    import matplotlib.pyplot as plt
    import seaborn as sns

    internal_chars = ['AMB_TEMP', 'CH4', 'CO', 'NMHC', 'NO', 'NO2', 'NOx', 'O3', 'PM10', 'PM2.5', 'RH', 'SO2', 'THC', 'WD_HR', 'WIND_DIREC', 'WIND_SPEED', 'WS_HR']
    corrmat = data9[internal_chars].corr()  # compute the correlation coefficients
    f, ax = plt.subplots(figsize=(20, 12))  # set the figure size
    plt.xticks(rotation=0)  # rotation must be a number, not the string '0'
    sns.heatmap(corrmat, square=False, linewidths=.8, annot=True)  # draw the annotated heatmap
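A heatmap is easy to scan by eye, but the same correlation matrix can also be turned into a ranked list of candidate features. The sketch below does this on synthetic data. The generated values and the `toy` and `ranking` names are assumptions for illustration, not the real measurements, and the post does not state that its features were chosen this way.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 200
pm10 = rng.normal(50, 15, n)
no2 = rng.normal(20, 5, n)
temp = rng.normal(22, 4, n)
# Synthetic target: driven by PM10 and NO2 plus noise, unrelated to temperature.
pm25 = 0.5 * pm10 + 0.8 * no2 + rng.normal(0, 5, n)

toy = pd.DataFrame({'PM10': pm10, 'NO2': no2, 'AMB_TEMP': temp, 'PM2.5': pm25})

corrmat = toy.corr()  # Pearson correlation by default

# Rank the other columns by the absolute value of their correlation with PM2.5.
ranking = corrmat['PM2.5'].drop('PM2.5').abs().sort_values(ascending=False)
print(ranking)
```

Columns near the top of such a ranking are natural first candidates for a linear model. Correlation only captures linear, pairwise relationships, though.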

Modeling: Predicting PM2.5

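Here `loss='epsilon_insensitive'` selects the epsilon-insensitive loss used in linear support vector regression, which ignores errors smaller than `epsilon`. Ordinary least squares would instead be `loss='squared_error'` (named `'squared_loss'` in older scikit-learn releases). `alpha=0.01` is the strength of the L2 regularization term, not the initial step size, which is controlled by `eta0`. `max_iter=10000` is the maximum number of passes over the training data. The final model scores 0.62, which is the R² value returned by `clf.score`. This may be because the dataset is rather small; later posts will keep working on improving it!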
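To make the choice of loss function more concrete, here is a small sketch comparing a squared (least-squares) loss with an epsilon-insensitive loss on a few residuals. The function names are hypothetical helpers written for this illustration, and `epsilon=0.1` is used because it is the `SGDRegressor` default.

```python
import numpy as np

def squared_loss(residual):
    """Least-squares loss: grows quadratically with the error."""
    return 0.5 * residual ** 2

def epsilon_insensitive_loss(residual, epsilon=0.1):
    """Zero inside the epsilon tube, linear in the error outside it."""
    return np.maximum(0.0, np.abs(residual) - epsilon)

residuals = np.array([-2.0, -0.05, 0.0, 0.05, 2.0])
print(squared_loss(residuals))
print(epsilon_insensitive_loss(residuals))
```

Small errors cost nothing under the epsilon-insensitive loss, and large errors are penalized linearly rather than quadratically. This makes it less sensitive to outliers than least squares.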

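    from sklearn.linear_model import SGDRegressor
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler

    X = data9[['PM10', 'NO2']]
    y = data9[['PM2.5']]

    # Standardize features and target with separate scalers
    X = StandardScaler().fit_transform(X)
    y = StandardScaler().fit_transform(y).ravel()  # SGDRegressor expects a 1-D target

    X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=1)

    # n_iter has been removed from scikit-learn; max_iter alone bounds the number of epochs
    clf = SGDRegressor(loss='epsilon_insensitive', alpha=0.01, penalty='l2', max_iter=10000, shuffle=True)
    clf.fit(X_train, y_train)
    clf.score(X, y)  # R^2 over the full dataset (training and test rows together)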
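For comparison with the library call, here is a minimal from-scratch sketch of batch gradient descent for linear regression in NumPy. It is not the code used in this post. It runs on synthetic data, uses a plain mean-squared-error loss without regularization, and the learning rate, iteration count, and helper names are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
# Synthetic standardized data: two features, known weights, a little noise.
X = rng.normal(size=(500, 2))
true_w = np.array([0.7, 0.2])
y = X @ true_w + rng.normal(scale=0.1, size=500)

def gradient_descent(X, y, lr=0.1, n_iter=200):
    """Batch gradient descent on mean squared error for y ~ X @ w + b."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(n_iter):
        error = X @ w + b - y              # prediction error for every row
        grad_w = 2 * X.T @ error / len(y)  # gradient of the MSE w.r.t. w
        grad_b = 2 * error.mean()          # gradient of the MSE w.r.t. b
        w -= lr * grad_w                   # step against the gradient
        b -= lr * grad_b
    return w, b

def r2_score(X, y, w, b):
    """Coefficient of determination, the quantity a regressor's score reports."""
    residual = y - (X @ w + b)
    return 1 - (residual ** 2).sum() / ((y - y.mean()) ** 2).sum()

w, b = gradient_descent(X, y)
print(w, b, r2_score(X, y, w, b))
```

Unlike this full-batch version, `SGDRegressor` updates the weights one sample at a time, which is what makes it practical for larger datasets.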